Machine Learning and CoreML

“WHAT THE HECK?! HOW CAN I UNLOCK MY PHONE WITH MY FACE?!”

Those were the words that came out of my mouth in October of 2017, as I pored over the user manual for my new iPhone X. It wasn’t all hyperbole, either — I really wanted to know, and I ended up dedicating quite a bit of time to learning about the science behind Apple’s new facial recognition technology. In the end, the answer to my question boiled down to two words — machine learning.

What is machine learning?

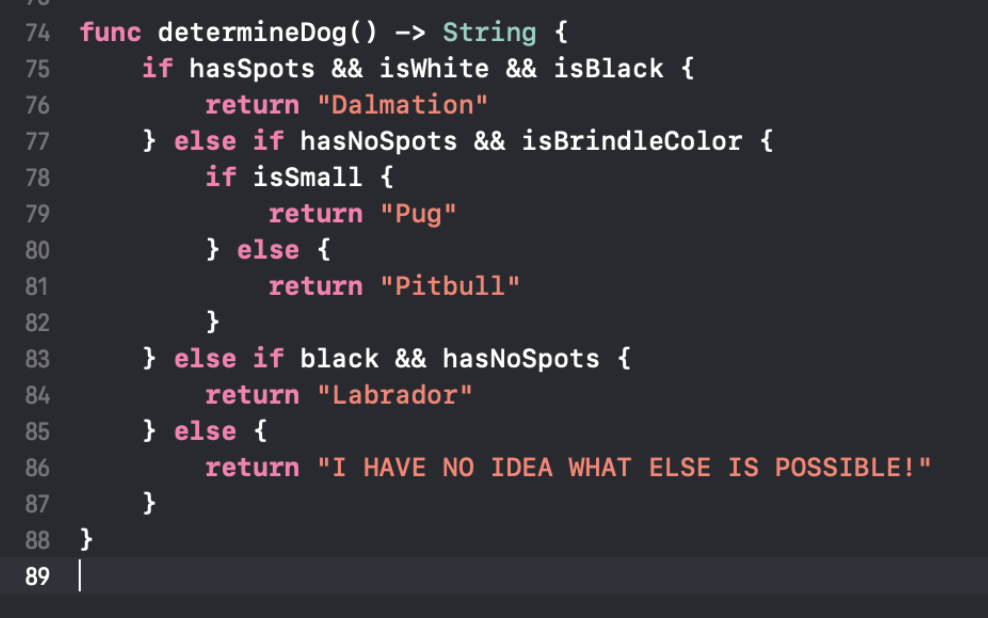

Imagine you work at a humane society, and your job is to classify the breeds of the dogs that are brought in. One day, someone comes by and drops off a generic-looking mutt — you don’t know anything about this dog or its family history. You decide to see if you can write a computer program to help you narrow the options. You start with the program below:
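
A hand-written, rule-based program of this kind might look something like the hypothetical Swift sketch below. The traits (weight, coat, snout), the thresholds, and the breeds are made up for illustration; the point is that every rule has to be anticipated and written by a person.

```swift
// A hypothetical, hand-written rule-based breed classifier.
// The traits, thresholds, and breeds are illustrative only.
struct DogTraits {
    var weightKg: Double
    var hasCurlyCoat: Bool
    var hasShortSnout: Bool
}

func classifyBreed(_ dog: DogTraits) -> String {
    // Every rule must be written (and maintained) by hand.
    if dog.hasShortSnout && dog.weightKg < 10 {
        return "pug"
    }
    if dog.hasCurlyCoat {
        return "poodle"
    }
    if dog.weightKg > 25 {
        return "labrador"
    }
    // Any dog the rules did not anticipate falls through to here.
    return "unknown"
}

let mystery = DogTraits(weightKg: 8, hasCurlyCoat: false, hasShortSnout: true)
print(classifyBreed(mystery))  // pug
```

Each new breed, mix, or unusual-looking dog needs yet another rule, and the rules quickly start to conflict.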

After a while, though, you realize that there is an almost infinite set of characteristics that could influence your final classification. A standard, rule-based program is not going to work here, but a machine learning approach might.

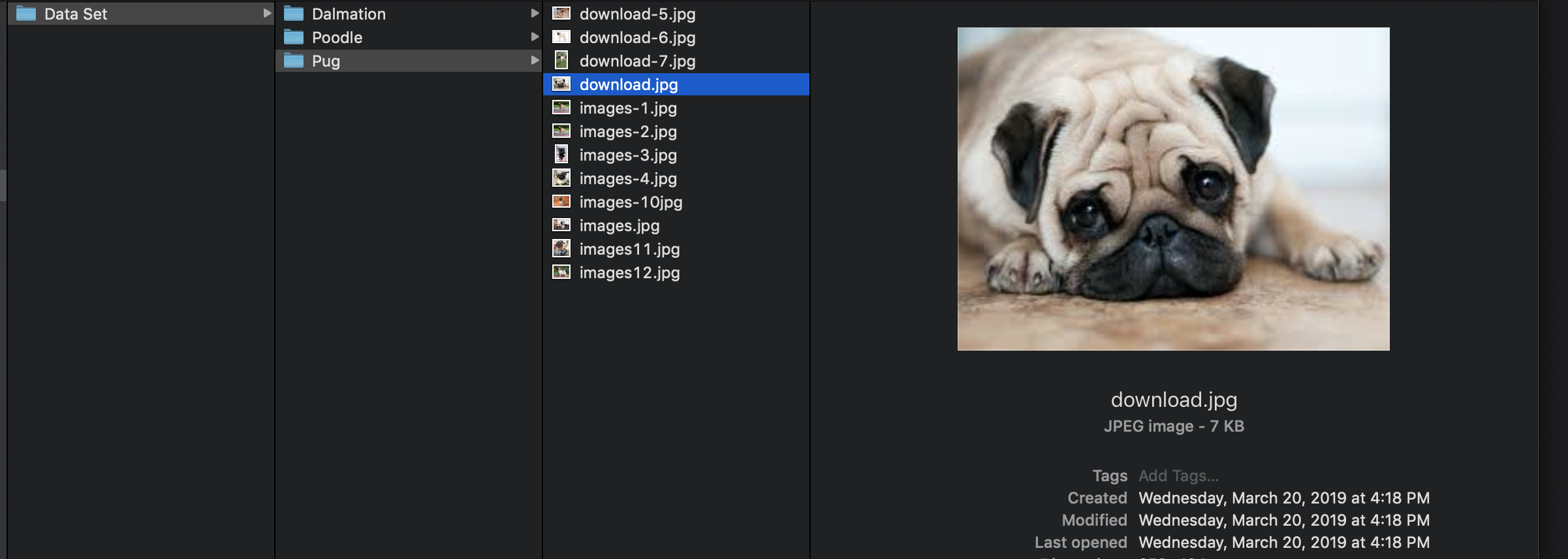

Instead of jumping straight into developing a program, the machine learning approach to this problem starts with a data set — images of thousands of dogs, each labeled with a breed:

Given this data set, a model can find which breed of dog the mystery dog looks most similar to. If the photo looks most like the images in the “pug” folder, the model will tell you that the mystery dog is a “pug”. Then (and this is the “learning” part) your mystery dog is added to the “pug” data set and can be used by your model the next time an unknown mutt is brought into your humane society.
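
The "find the most similar dog, then add it to the data set" loop described above resembles a nearest-neighbor classifier. The Swift sketch below is a deliberately simplified, hypothetical version: each dog is a short list of made-up numeric features rather than a photo, whereas real image classifiers typically learn their features from the pixels themselves.

```swift
// Hypothetical feature vectors stand in for photos; the numbers are made up.
struct LabeledDog {
    let features: [Double]
    let breed: String
}

// Squared Euclidean distance: smaller means "looks more similar".
func squaredDistance(_ a: [Double], _ b: [Double]) -> Double {
    precondition(a.count == b.count, "feature vectors must have the same length")
    var sum = 0.0
    for (x, y) in zip(a, b) {
        sum += (x - y) * (x - y)
    }
    return sum
}

struct NearestNeighborClassifier {
    var examples: [LabeledDog]

    // Return the breed of the single most similar known dog (1-nearest neighbor).
    func predict(_ features: [Double]) -> String? {
        let nearest = examples.min(by: {
            squaredDistance($0.features, features) < squaredDistance($1.features, features)
        })
        return nearest?.breed
    }

    // The "learning" step: once a label is confirmed, keep the example.
    mutating func addConfirmed(_ features: [Double], breed: String) {
        examples.append(LabeledDog(features: features, breed: breed))
    }
}

var classifier = NearestNeighborClassifier(examples: [
    LabeledDog(features: [0.9, 0.1], breed: "pug"),
    LabeledDog(features: [0.2, 0.8], breed: "labrador"),
])

let mysteryDog = [0.8, 0.2]
if let guess = classifier.predict(mysteryDog) {
    print("Looks like a \(guess)")  // Looks like a pug
    classifier.addConfirmed(mysteryDog, breed: guess)
}
print(classifier.examples.count)  // 3
```

Because confirmed dogs are appended to `examples`, later predictions have more reference points to compare against, which is the sense in which this toy model "learns".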

Over time, your model will get better and better at choosing the right breed on the first try, because every correct identification adds another labeled dog photo to its data set. Google Images works in a similar way: if you search for an image of a “goat” and then click on a picture of a goat, you’re giving Google feedback about what a goat looks like, which can help improve its results for future searchers.

How does your iPhone use machine learning?

Apple uses machine learning to build a facial recognition model so precise that the company is willing to bet your phone’s security on it. But facial recognition is actually only the latest in a series of machine learning models built into your iPhone.

For example, iMessage contains a machine learning model that predicts the next word, phrase, or emoji you’ll type. Below, you can see that the iMessage suggestion bar is predicting I’ll want to insert a bowling emoji after the phrase “Want to go bowling”. This suggestion is based on analysis of a huge collection of text, and my decision to insert or not insert the emoji adds yet another data point to aid future predictions.
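
To make the idea of "predict the next word, then learn from what the user actually sent" concrete, here is a toy Swift sketch that simply counts which word or emoji followed which. It is a hypothetical illustration of the general idea, not how Apple's keyboard or iMessage actually works.

```swift
// A toy next-word suggester: counts which token followed which.
// Illustrative only; not Apple's implementation.
struct NextWordSuggester {
    // counts[previous][next] = how many times `next` followed `previous`
    var counts: [String: [String: Int]] = [:]

    // Learn from a message the user actually sent.
    mutating func learn(from tokens: [String]) {
        for (previous, next) in zip(tokens, tokens.dropFirst()) {
            counts[previous, default: [:]][next, default: 0] += 1
        }
    }

    // Suggest the most frequent follower of `previous`.
    // Ties are broken alphabetically so the result is deterministic.
    func suggest(after previous: String) -> String? {
        guard let followers = counts[previous] else { return nil }
        return followers.max(by: { ($0.value, $1.key) < ($1.value, $0.key) })?.key
    }
}

var suggester = NextWordSuggester()
suggester.learn(from: ["want", "to", "go", "bowling", "🎳"])
suggester.learn(from: ["love", "bowling", "🎳"])
suggester.learn(from: ["bowling", "tonight"])
print(suggester.suggest(after: "bowling") ?? "none")  // 🎳
```

Every message passed to `learn(from:)` is another data point: if the user kept typing "tonight" instead of the emoji, that count would eventually overtake it.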

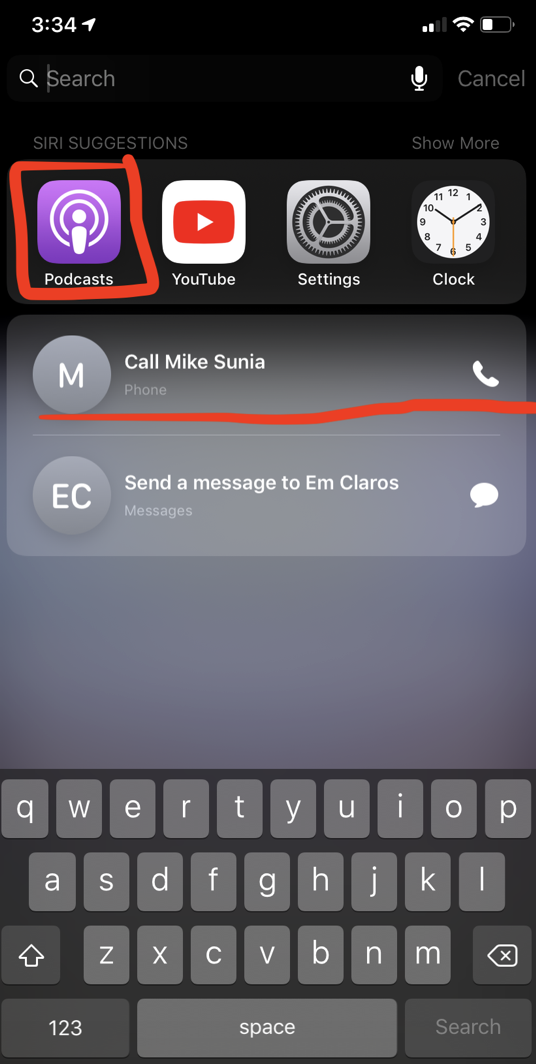

Siri, the iPhone’s personal assistant, also relies heavily on machine learning. In the example below, Siri has used my past behavior to suggest some things I might want to do at 3:34 on a Friday:

Can I use machine learning in my own iPhone app?

Well…maybe. You’re going to have to grapple with the three major issues that all developers run into when using machine learning:

- Correctness. How do you know if your model is giving good answers? In the dog example above, if you work at a humane society that takes in only purebred dogs, you’ll probably find it pretty easy to judge whether the model was right or wrong. But if the model says it is 42% confident your dog is a pug and 41% confident it is a labrador, will you be able to give it appropriate feedback?

- Performance. Machine learning models can process enormous datasets, and if they’re not designed carefully, training or running them can take hours or even days. That might not be a big issue for dog breed classification, but in other contexts, such as unlocking your iPhone, where the answer is needed almost instantly, it would be a serious problem.

- Energy efficiency. Similar to the performance issue, a large, poorly optimized machine learning model can use a huge amount of energy cranking through big datasets, possibly more than an iPhone’s battery can reasonably supply.
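
For the correctness problem, one common safeguard is to treat a prediction as a "close call" when the top two confidence scores are nearly tied, and to ask a person to confirm before trusting it or learning from it. The Swift sketch below is a hypothetical illustration; the 0.05 margin is an arbitrary assumption, not a recommended value.

```swift
// Flags predictions whose top two confidence scores are too close to call.
// The default margin of 0.05 is an arbitrary, hypothetical threshold.
func isCloseCall(_ scores: [String: Double], margin: Double = 0.05) -> Bool {
    let sorted = scores.values.sorted(by: >)
    guard sorted.count >= 2 else { return false }
    return sorted[0] - sorted[1] < margin
}

// The 42% pug vs. 41% labrador case from the Correctness bullet.
let scores = ["pug": 0.42, "labrador": 0.41, "beagle": 0.17]
print(isCloseCall(scores))  // true
```

A model that flags its own close calls makes it easier to give feedback only where a human can actually judge the answer.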

Fortunately, Apple has made big strides toward solving these problems in their own models, and they’ve generously shared some of their solutions with the developer community. CoreML is a publicly available Apple framework that “allows developers to integrate trained machine learning models into their own iPhone apps.”

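According to Apple, “A trained model is the result of applying a machine learning algorithm to a set of training data…For example, a model that’s been trained on a region’s historical house prices may be able to predict a house’s price when given the number of bedrooms and bathrooms.” Because the model arrives already trained, your app skips the slow, data-hungry training step and only has to make predictions. A model that was trained and tested on a large, well-labeled data set is also more likely to give correct answers than one you assemble from scratch, although no model is right every time. And because CoreML is optimized to run models on the iPhone’s own hardware (the CPU, the GPU, and, on recent devices, the Neural Engine), those predictions can be fast and energy-efficient.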
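
Apple's house-price example can be made concrete with a small sketch. The Swift code below is hypothetical and is not CoreML code: it "trains" a linear model, price ≈ intercept + a per-bedroom amount + a per-bathroom amount, by ordinary least squares on made-up sales data, then uses the result to predict. It separates the two phases in the quote: training (applying an algorithm to data, done once, ahead of time) and prediction (cheap, done in the app). Running already-trained models on the device is CoreML's main job.

```swift
// Hypothetical sketch of "training" vs. "prediction" for a house-price model.
struct HouseSale {
    let bedrooms: Double
    let bathrooms: Double
    let price: Double  // in thousands of dollars
}

struct LinearPriceModel {
    let weights: [Double]  // [intercept, per-bedroom, per-bathroom]

    // Prediction: cheap arithmetic once the weights are known.
    func predict(bedrooms: Double, bathrooms: Double) -> Double {
        return weights[0] + weights[1] * bedrooms + weights[2] * bathrooms
    }
}

// Determinant of a 3x3 matrix.
func det3(_ m: [[Double]]) -> Double {
    let a = m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
    let b = m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
    let c = m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    return a - b + c
}

// Training: ordinary least squares via the normal equations (XᵀX)w = Xᵀy,
// solved with Cramer's rule. Each row of X is [1, bedrooms, bathrooms].
func train(on sales: [HouseSale]) -> LinearPriceModel? {
    var xtx = Array(repeating: Array(repeating: 0.0, count: 3), count: 3)
    var xty = Array(repeating: 0.0, count: 3)
    for sale in sales {
        let row = [1.0, sale.bedrooms, sale.bathrooms]
        for i in 0..<3 {
            xty[i] += row[i] * sale.price
            for j in 0..<3 {
                xtx[i][j] += row[i] * row[j]
            }
        }
    }
    let d = det3(xtx)
    // Too few examples, or bedrooms and bathrooms move in lockstep.
    guard abs(d) > 1e-9 else { return nil }
    var weights = [Double]()
    for k in 0..<3 {
        var mk = xtx
        for i in 0..<3 { mk[i][k] = xty[i] }  // replace column k with Xᵀy
        weights.append(det3(mk) / d)
    }
    return LinearPriceModel(weights: weights)
}

// Made-up historical sales.
let history = [
    HouseSale(bedrooms: 1, bathrooms: 1, price: 175),
    HouseSale(bedrooms: 2, bathrooms: 1, price: 275),
    HouseSale(bedrooms: 3, bathrooms: 1, price: 375),
    HouseSale(bedrooms: 3, bathrooms: 2, price: 400),
    HouseSale(bedrooms: 4, bathrooms: 2, price: 500),
]
if let model = train(on: history) {
    print(model.predict(bedrooms: 3, bathrooms: 3))  // 425.0
}
```

Training loops over every historical sale, while prediction is three multiplications and two additions. That asymmetry is why shipping an already-trained model to the phone is attractive.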

In conclusion, machine learning offers solutions to a number of problems that standard, rule-based computer programs can’t solve. Building a machine learning model is by no means easy, but if you’re developing for the iPhone, I highly recommend taking advantage of optimized frameworks such as CoreML and the trained models available for it.