Parallel Computing with a GPU

Graphic Processor Units are becoming more and more important in recent years and are spreading into many different fields, some of which include: computational finance, defense and intelligence, machine learning, fluid dynamics, structural mechanics, electronic biology, physics, chemistry, numerical analysis and security. There are many reasons why a person should learn how to write code for a GPU. For example, GPU’s have been used to successfully decrypt passwords (add reference) in record time, a 25-GPU cluster is able to crack any standard Windows password (95^8 combinations) in less than 6 hours. Other applications of the GPU include data mining for Bitcoin and also applying machine learning for sentimental analysis on tweets.

In 2003 the National Center for Supercomputing Applications had already built a cluster using 70 PlayStation 2 < $50k

In November 2010 the Air Force Research Laboratory created a powerful supercomputer by connecting together 1,760 Sony PS3s

GPU’s have also been used for building clusters, in 2003 the National Center for Supercomputing Applications configured 70 PlayStation 2’s to create one of the top 500 clusters in the world using just $50,000. Following their genius example, in November 2010 the Air Force Research Laboratory created a powerful supercomputer by connecting together 1,760 Sony PS3s.

But, let’s take a step back, what is a GPU?

The first graphic cards were developed in the seventies when some arcade game companies decided to implement a dedicated hardware specialized in the graphics. In 1979, Namco Galaxian used specialized graphics hardware to support RGB color, multi-colored sprites and tilemap backgrounds. A few years later, the Commodore Amiga developed the Video Display Controller supporting line draw, area fill and included a type processor, which accelerated the movement, manipulation and combination of multiple arbitrary bitmaps.

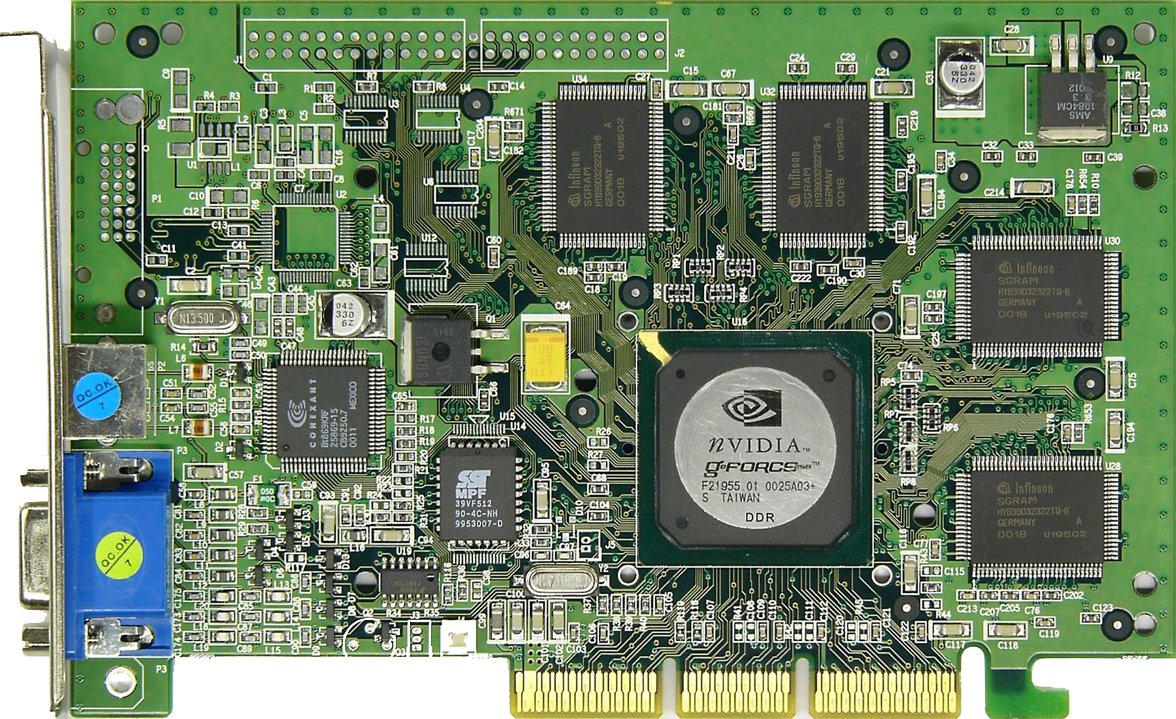

On October 11, 1999, the California based company Nvidia popularized the term GPU by marketing the GeForce 256 as “the world’s first ‘GPU,’ a single-chip processor with integrated transform lighting, triangle setup/clipping and rendering engines that are capable of processing a minimum of 10 million polygons per second.”

A GPU is a specific piece of hardware that helps the Central Processing Unit complete certain tasks. In the early days of computing, graphic processing was performed solely by the CPU, however, in the last twenty years, more and more tasks have been moved, first to the VDC, then on to the GPU. The kind of work that the GPU completes is highly parallel; it controls every single pixel, polygon and effect that displays on the user’s screen. This key feature is clearly represented in the diagram below.

While the CPU is able to perform powerful and complex sequential calculations, the GPU is composed of many small simple processors (arithmetic logic units) allowing it to implement highly parallel functions.

In the picture below there is a screen shot of GPU characteristics of a retina 15 MacBook Pro. While the CPU has 4 core and several megabytes of memory, the GPU contains 100’s of cores and limited memory.

Before overestimating the power of the GPU, it’s important to note one crucial limitation. Not everything can be parallelized, as Amdahl’s law argues, “the speedup of a program using multiple processors in parallel computing is limited by the time needed for the sequential fractions of the program.”

Amdahl’s law is the reason why, even with the best GPU computer, we can’t have infinite speed and power

CUDA and the NCAA tournament

The most popular framework for GPU programming is called Cuda from Nvidia. In the picture below, there is an abstract representation of the GPU that Nvidia made for developers to perceive its structure.

In every device there are several grids, which contain several blocks. In every block there are many threads, meaning single elementary processes can be executed. To maximize the efficiency of the computation, it’s important to reduce the number of memory transfers between the GPU and the external memory.

My goal was to perform a simulation in order to predict the winner of the NCAA March Madness Basketball Tournament. My strategy was to use the Monte Carlo method, as explained in my previous blog post, to calculate each team’s odds of winning the tournament. The simple model assumed an estimate of an “offensive power” and a “defensive power” of each team. I extrapolated these values from the stats in kenpom.com. (I used kenpom.com to successfully extrapolate the values shown/ these values/ the given values. (pick the one you prefer)

1) The algorithm is shown below. A match is simulated in the following way.

- For each team, the statistical data is used to estimate the average of number of points scored by a given team. The average scored by team A to the team B is proportional to the offensive power of A divided by the defensive power of B.

- From this value, the actual score is generated as a random number picked from the normal distribution center in that value. Since we are dealing with a simplified model, I assumed the variance to be a constant value. This process is then applied to every match in the tournament.

- The tournament is repeated thousands of times resulting in the list of wins for every team.

Numpy, Numba and NumbaPro

One reason why Python has become popular in the scientific community is because of Numpy, a math framework that allows a function to have a kernel (input) of tensors, matrices and vectors instead of a simple collection of data. This aspect is called Array Oriented Computing. Other than expressing domain knowledge directly in arrays, AOC takes full advantage of parallelism and accelerators.

Numba uses the LLVM library to compile python code and to optimize regular code into AOC. The compilation is then performed using decorators (see here for more details).

Why is this relevant to GPU computing? In 2012 Continuus Integration released an advanced version of Numba called NumbaPro, which allows Numba code to run on NVidia graphic cards.

The developer still needs to be aware of the fact that the code is running on the GPU, to optimize the performances reducing memory transfers and synchronizing threads correctly. However, most of the code is identical to the CPU version.

Referring back to the algorithm, the whole tournament can now be run on the GPU using the vectorize decorator. There are just two memory transfers (RAM <-> GPU): before the tournament is started and after the tournament is completed.

One important note is that the “tournament” process should be sequential because the initial step needs to be performed and completed before the second step can start, and so on for all the stages of the tournament. To avoid this sequential mishap, the algorithm I designed simulates every potential match (see picture). This process does in fact simulate ~2,000 matches instead of ~200 but it calculates them all at the same time satisfying Amdahl’s law.

Another parallelization improvement is when generating the Gaussian distribution. A bundle of uniform random numbers, is converted into normally distributed ones. This process also happens one time, parallelizing this calculation.

The code used in the simulation can be found here.

In the 10k simulation on my MacBook Pro I obtained more than a 200% increase of speed which is a highly satisfying result considering the low-performance of the built-in GPU. Even further, this result could be enhanced greatly if the GPU was not built-in and was able to store more memory.

2 Comments